Blur Does Not Equal Antialiasing

In HUGI #12 and #13, there was an article with info on "antialiasing" a picture. The method worked by averaging adjacent pixels to reduce the jagged edges inherent in digitized pictures. Sometimes this was correctly called "blurring", but sometimes it was also referred to as "antialiasing". These are two quite different things, however.

In all digital signals, audio, picture or otherwise, we run into the effects of sampling and quantization. The latter we will not touch here. As for the former, it is well known (by Shannon's sampling theorem) that a continuous function (which all digitized pictures and sound signals attempt to represent) can, under one condition, be recovered exactly from its samples taken at regular intervals (with pictures, in units of length; with sound, in units of time; with animations, in units of space-time). The condition is that no frequencies (in space, time or space-time, respectively) above half the sample rate are present. If such frequencies are present nevertheless, they fold back into the range between zero and half the sample rate (the Nyquist frequency). There they manifest themselves as distortion, i.e. alias.
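The folding described above can be sketched in a few lines of Python. This is only an illustration for a single sinusoid; the names `alias_frequency` and `sample_cosine` are made up for this example and do not come from the original article.

```python
import math

def alias_frequency(f, sample_rate):
    """Apparent frequency, after sampling, of a sinusoid of frequency f."""
    nyquist = sample_rate / 2.0
    # Sampling cannot tell f apart from f + k * sample_rate.
    f = abs(f) % sample_rate
    # Anything left above Nyquist is mirrored back below it.
    return sample_rate - f if f > nyquist else f

def sample_cosine(f, sample_rate, count):
    """Take `count` regularly spaced samples of cos(2*pi*f*t)."""
    return [math.cos(2 * math.pi * f * n / sample_rate) for n in range(count)]

if __name__ == "__main__":
    # A 7 Hz cosine sampled at 10 Hz yields the same samples as a 3 Hz cosine.
    print(alias_frequency(7, 10))
    high = sample_cosine(7, 10, 20)
    low = sample_cosine(3, 10, 20)
    print(max(abs(a - b) for a, b in zip(high, low)))
```

Once the samples are taken, nothing distinguishes the folded 7 Hz component from a genuine 3 Hz one, which is why the filtering has to happen before sampling.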

The procedure of removing (or reducing) such frequencies prior to sampling is referred to as antialiasing. Antialiasing should be applied on resampling as well, since ideal resampling is equivalent to perfect continuous reconstruction followed by sampling at the new rate. Essentially, both antialiasing and reconstruction (also called anti-imaging) amount to lowpass filtering. One (rather unsophisticated) form of lowpass filtering is, indeed, finite averaging (i.e. blurring, in the case of pictures). If a moving average is used, good. If a regular grid is imposed and the average is taken over each grid square, "pixelation" results. The former is proper, shift-invariant filtering; the latter is also lowpass filtering, but with a highly shift-variant filter. The former is often preferable, although there is some psychological backing for the latter where pictures are concerned. (It really amounts to approximating the amount of energy falling inside a given grid square, which is clearly closer to the operation of the human retina. It also works better with time-frequency decompositions...) If (near) perfect lowpass filtering is done (using convolution with a windowed sin(x)/x function, for instance), very good results will emerge. If a lower order filter is employed, the result is still considerably better than nothing.
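To make the shift-invariant versus shift-variant distinction concrete, here is a minimal one-dimensional sketch. The function names, the clamped edge handling and the odd window width are assumptions of this example, not something the article prescribes.

```python
def moving_average(signal, width):
    """Shift-invariant box filter (odd width); edge samples are clamped."""
    half = width // 2
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - half, i + half + 1)]
        out.append(sum(window) / len(window))
    return out

def block_average(signal, width):
    """Shift-variant "pixelation": average over fixed grid cells."""
    out = []
    for start in range(0, len(signal), width):
        cell = signal[start:start + width]
        out.extend([sum(cell) / len(cell)] * len(cell))
    return out

if __name__ == "__main__":
    edge = [0] * 4 + [1] * 8       # a step edge
    shifted = [0] * 5 + [1] * 7    # the same edge, moved by one sample
    print(moving_average(edge, 3))
    print(moving_average(shifted, 3))   # same ramp, moved by one sample
    print(block_average(edge, 4))
    print(block_average(shifted, 4))    # a differently shaped result
```

With the moving average, shifting the input merely shifts the output. With the grid average, the same one-sample shift changes the shape of the output, because the result depends on where the edge falls relative to the grid.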

What's the difference between what I called "blurring" and "antialiasing" above, then? The difference is in whether the lowpass filtering operation is applied before or after the (re-)sampling step. Since alias is created by the sampling step itself, blurring the samples afterwards cannot undo it. Rather, blurring approximates first aliasing the picture to the current resolution, then properly resampling it to a still lower resolution, and then properly upsampling the result back to the current resolution. Effectively, we cause distortion and then patch it up by using a resolution lower than the screen resolution. If fine detail (high frequencies) is present in the original signal, blurring will only make the result more cluttered, whereas antialiasing will leave at least part of the detail visible.
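The order of operations can be tried out on a one-dimensional signal. The sketch below is only an illustration under stated assumptions: a finely sampled cosine stands in for the continuous picture, and the constants `FACTOR`, `FREQ` and `PIXELS`, the 3-tap blur and the box prefilter are arbitrary choices made for this example.

```python
import math

FACTOR = 8    # fine samples per output pixel (stand-in for the continuous signal)
FREQ = 0.9    # cycles per output pixel, well above the Nyquist frequency of 0.5
PIXELS = 40

def fine_signal(i):
    """Value of the finely sampled test signal at fine index i."""
    return math.cos(2 * math.pi * FREQ * i / FACTOR)

def point_sample():
    """Sample without any filtering: the detail aliases to 0.1 cycles per pixel."""
    return [fine_signal(n * FACTOR) for n in range(PIXELS)]

def blur3(samples):
    """Blur AFTER sampling: 3-tap moving average with clamped edges."""
    n = len(samples)
    return [(samples[max(i - 1, 0)] + samples[i] + samples[min(i + 1, n - 1)]) / 3
            for i in range(n)]

def prefilter_then_sample():
    """Lowpass BEFORE sampling: average the fine samples under each pixel."""
    return [sum(fine_signal(n * FACTOR + k) for k in range(FACTOR)) / FACTOR
            for n in range(PIXELS)]

def peak(samples):
    return max(abs(v) for v in samples)

if __name__ == "__main__":
    print("blur after sampling:  ", peak(blur3(point_sample())))
    print("filter, then sample:  ", peak(prefilter_then_sample()))
```

The blurred version keeps most of the alias (its peak stays above 0.8), because the alias now sits at a low frequency that the blur passes almost untouched. Even the crude box prefilter brings the peak down to roughly 0.11.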

Blurring looks better than nothing, however. This is because the conceptual resampling step in drawing lines and other common geometrical objects involves a rather benign resampling ratio - all the objects drawn are at least 1 pixel wide and so do not have considerable high frequency content (there is no significant detail at the 1/10 pixel level, for instance). This means that aliasing is not a big problem and the frequency foldover will mainly happen only once (mirroring through the Nyquist frequency), not repeatedly (say, seven times: first from Nyquist, then zero, then Nyquist etc.). Lowpass filtering will then reduce the high end present in the picture and thus kill most of the alias. The high end which should be there goes too, of course. The result is almost properly antialiased but lacking in high end - blurred. (This is not exactly true, of course. See the comments after the pictures...)

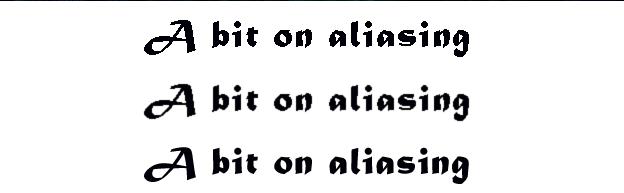

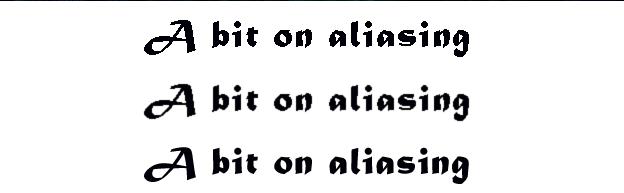

The problem is best seen by viewing an example picture with some text in it. The examples have been created with Photoshop 4.0, as it does a good job of antialiasing fonts. (And a surprisingly good job of resizing pictures, too.) The first picture shows some text on a white background. Antialiasing is not used, so jaggies are evident on the edges. The second picture shows the effect of slightly blurring the result. Jaggies become less noticeable, but if viewed from afar, they are still visible. The third picture shows the effect of proper low order antialiasing. Jaggies cannot be avoided completely, but it is evident that when looking from a distance, fewer jaggies show through. Also, the finer detail in the fonts is less cluttered.

For the knowledgeable, a bit more has to be said about why blurring does not correct the situation even if we consider our final picture to be at a lower-than-screen resolution. The reason is that when we draw a line (with Bresenham's algo, for instance), we not only forget to antialias it, but also draw the nearest pixel to the real one at this resolution. This is really a shift-variant, nonlinear filtering operation, which moves the real pixel to the closest available one. Since this is nonlinear, new frequencies _can_ arise in the resulting signal. This happens, too - it is seen in the jaggies. In a line with a gentle slope, proper antialiasing would gradually shift the weight from one pixel row to another, creating smoothly shaded lines on both sides of the actual pixel position. Blurring, however, sees only the line which already has alias and rounding distortion, and blurs to a lower resolution from there. This is seen in that, first, the line seems to widen and, second, the shaded pixel rows form on both sides of the line and display even pixel values everywhere but in the immediate vicinity of a jaggie.
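The contrast between nearest-pixel rounding and a gradual shift of weight can be sketched as follows. The first function is the usual integer Bresenham stepping, restricted to gentle slopes. The second is an assumed, simplified weighting (a linear split between the two nearest rows, in the spirit of Wu-style line drawing) and not a method taken from the article; both function names are made up for this example.

```python
import math

def bresenham_rows(x1, y1):
    """Row picked in each column for a line from (0, 0) to (x1, y1), 0 <= y1 <= x1."""
    rows = []
    err = 2 * y1 - x1
    y = 0
    for _ in range(x1 + 1):
        rows.append(y)
        if err > 0:          # the true line has passed the midpoint: step up
            y += 1
            err -= 2 * x1
        err += 2 * y1
    return rows

def antialiased_weights(x1, y1):
    """Per column, a {row: weight} dict splitting the intensity between two rows."""
    columns = []
    for x in range(x1 + 1):
        y = x * y1 / x1              # exact position of the line in this column
        low = math.floor(y)
        frac = y - low
        column = {low: 1.0 - frac}
        if frac > 0:
            column[low + 1] = frac   # the weight moves gradually to the next row
        columns.append(column)
    return columns

if __name__ == "__main__":
    print(bresenham_rows(8, 2))
    for x, column in enumerate(antialiased_weights(8, 2)):
        print(x, column)
```

For the line from (0, 0) to (8, 2), the Bresenham rows jump abruptly from one row to the next, while the weighted version hands the intensity over in steps of a quarter. A blur applied to the first result has only the rounded rows to work with.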

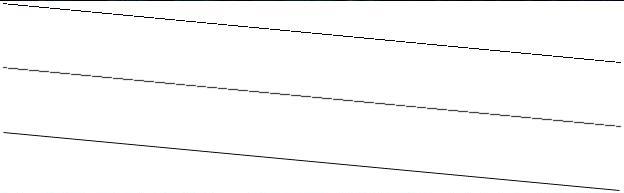

These effects are seen in the three following pictures of a one pixel wide line. The first line is drawn with no antialiasing. Jaggies happen. Second, a blur is applied: widening and some smoothing of corners is seen, of course. The third line is properly antialiased - the width is closer to the first line than to the second and on a closer look, the proper interpolation values on the two sides of the actual line position are seen. Better, eh?

In conclusion, if you need an effect, use blur. If you need proper antialiasing, use something else (e.g. mipmaps for pictures, rendering at a higher resolution and downsampling properly, incident area estimation and alpha blending or summing of windowed sin(x)/x curves for each pixel produced). Or simply go to a higher resolution. Who ever said lowres and antialias was simple or efficient?
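As one possible reading of "rendering at a higher resolution and downsampling properly", here is a minimal supersampling sketch. It uses a plain box average over the subsamples, which is a low order filter and only approximates proper downsampling. The `render` function, its `inside` callback and the `factor` parameter are hypothetical names chosen for this example.

```python
def render(width, height, inside, factor=4):
    """Estimate per-pixel coverage of a shape from factor x factor subsamples."""
    image = []
    for py in range(height):
        row = []
        for px in range(width):
            hits = 0
            for sy in range(factor):
                for sx in range(factor):
                    # Subsample positions are spread evenly inside the pixel.
                    x = px + (sx + 0.5) / factor
                    y = py + (sy + 0.5) / factor
                    if inside(x, y):
                        hits += 1
            row.append(hits / (factor * factor))
        image.append(row)
    return image

if __name__ == "__main__":
    def half_plane(x, y):
        return x < 1.5       # an edge running through the middle of pixel 1

    print(render(3, 1, half_plane, factor=1))   # one sample per pixel: hard edge
    print(render(3, 1, half_plane, factor=4))   # supersampled: partial coverage
```

With `factor=1` the edge pixel is either fully on or fully off. With `factor=4` it receives the fraction of its area that the shape covers, which is also a crude form of the incident area estimation mentioned above.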

decoy//dawn <decoy@iki.fi>

student/math/Helsinki university

http://iki.fi/~decoy/edecoy.html